Maybe AI is being used as a grifting tool, but at the same time it could have some relatively good use cases. Maybe your way of asking questions is too harsh? What kind of questions do you ask that apparently annoy the users? What kind of venting do you do that, in your opinion, bothers people so much?

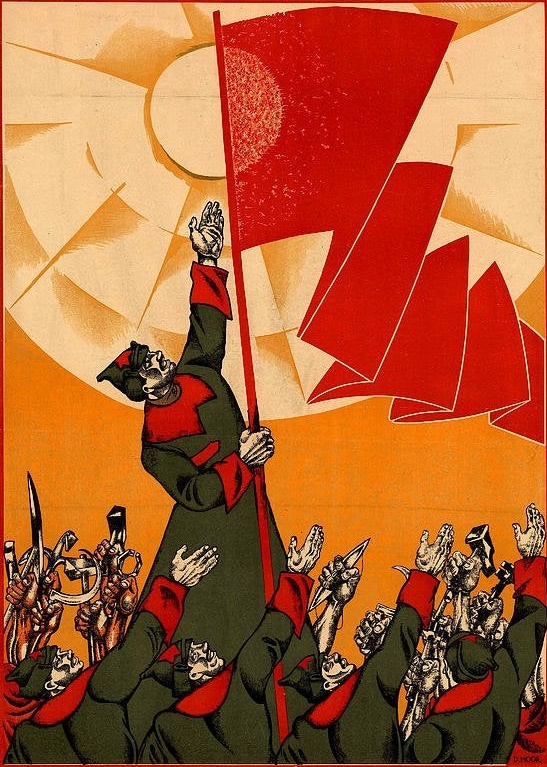

Obligatory Redsails/Pol Clarissou article:

https://redsails.org/artisanal-intelligence/

Marx on artisanal reaction:

https://www.marxists.org/archive/marx/works/download/pdf/18th-Brumaire.pdf

The thing that hurt me the most was that certain hexbear users I looked up to before turned out to be my biggest haters. And so vile. As soon as it’s against “acceptable targets” they become indistinguishable from reactionaries.

I know they didn’t change, I changed, but I still feel betrayed. They tricked me into fighting for copyright, the thing I hated my whole life.

I think it’s better when they are mask off though, even if it hurts.

For some people, hating AI has become their whole identity.

AI is sick. DeepMind solved protein folding.

Yes, slop is a real problem, but people who hate on or dismiss AI reflexively are being extremely ignorant and short-sighted. Unfortunately, that’s most people on my beloved hexbear.

AI for protein folding and LLM chatbots are significantly different I think. At least the first one was created for one clear scientific purpose.

Significantly how? Both LLMs and AlphaFold are attention-based (transformer-style) neural networks. The LLM chatbot is trained on sequences of words, and AlphaFold is trained on sequences of amino acids paired with their known structures. Certainly training AlphaFold was ‘easier’ in that proteins follow physical rules and there are experimentally determined structures to check a prediction against, so it’s also easier to get reliable output from it, which makes it very good at one specific task. But they both work on the same principles under the hood. Word-prediction LLMs can’t have that kind of checkable output because we use words for so many different things. It would be like asking a person to only ever communicate in poetry and no other way.

> one clear scientific purpose

Computer scientists in academia are using DeepSeek to solve new problems in new ways too. They especially like DeepSeek and other Chinese models because they’re open-weights and don’t obfuscate any of their inner workings (such as the reasoning chain), so researchers can fine-tune them to their specific needs.

I have to assume the purpose of amino acids wasn’t so clear when we first found out about them, before we set out to investigate and, through extensive research and testing, found out how they work and what they actually do. It’s on us to discover the laws of the universe; they don’t come to us beamed from heaven straight into our brain.

Well, at least they differ in that AlphaFold has a specific goal that we can verify (perhaps not easily) and it has practical scientific benefits, while an LLM is trained to solve all tasks at once, and it isn’t even known which ones.

LLMs aren’t trained to “solve” anything. They’re just sequence predictors. They predict the next item in a sequence of words, that’s it. Some predict better than others in specific scenarios.
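To make “sequence predictor” concrete, here is a toy sketch of my own. It is nothing like a real LLM in scale or architecture (it only counts which word follows which, where a real model uses a neural network over long contexts), and the names `train_bigram` and `predict_next` are made up for illustration. The principle of “given what came before, guess what comes next” is the same, though.

```python
from collections import Counter, defaultdict

def train_bigram(text):
    """Count, for every word, which words follow it and how often."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def predict_next(counts, word):
    """Return the most frequently seen follower of `word`, or None if unseen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical miniature "training corpus", purely for illustration.
corpus = "the cat sat on the mat and the cat slept on the sofa"
model = train_bigram(corpus)

print(predict_next(model, "the"))  # most common word seen after "the"
print(predict_next(model, "on"))   # most common word seen after "on"
```

Swap in a different corpus and the predictions change with it, which is the sense in which some models predict better than others in specific scenarios.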

Through techniques like retrieval-augmented generation (RAG) and multi-model frameworks, you can tailor the output to fit specific tasks or use cases. This is very powerful for automating routine workflows.

You can also verify the output of a chatbot or artbot, even more easily. For example, I have no clue about protein folding whatsoever, but (I hope) we can all tell word salad from coherent text.
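As a rough sketch of the retrieval idea: look up the most relevant document first, then hand it to the model as context alongside the question. Everything here is a simplifying assumption on my part. Real setups typically rank documents with embedding similarity rather than word overlap, the helper names (`tokenize`, `retrieve`, `build_prompt`) and the sample documents are invented, and the final call to an actual model is left out.

```python
def tokenize(text):
    """Lowercase the text and split it into a set of words, ignoring basic punctuation."""
    for mark in "?.,":
        text = text.replace(mark, " ")
    return set(text.lower().split())

def retrieve(question, documents, k=1):
    """Rank documents by how many words they share with the question (a stand-in for embeddings)."""
    q = tokenize(question)
    ranked = sorted(documents, key=lambda d: len(q & tokenize(d)), reverse=True)
    return ranked[:k]

def build_prompt(question, documents, k=1):
    """Assemble the text that would be sent to a language model."""
    context = "\n".join(retrieve(question, documents, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"

# Hypothetical internal documents for a routine office workflow.
documents = [
    "Invoices are due within 30 days of the billing date.",
    "The office is closed on public holidays.",
    "Refund requests must be filed through the support portal.",
]

prompt = build_prompt("When are invoices due?", documents)
print(prompt)
```

The model never has to “know” the invoice policy; the retrieval step puts the relevant text in front of it, which is what makes the output easier to steer toward a specific task.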

A shame that you’re being pushed away so often. I know that you make a lot of contributions to this space, so know that at the very least you are appreciated.

I personally don’t see any long-term benefit in AI in its current state. I’m not a fan of outsourcing human relationships or tasks that require mental exercise, experience and wisdom.

But perhaps one of our learned materialists on here can correct my prejudices towards it.

I wrote a lengthy essay on some of this if you want to read it https://en.prolewiki.org/wiki/Essay:Intellectual_property_in_the_times_of_AI

For outsourcing specifically, from the moment one uses a lighter to light a fire (instead of rubbing two sticks together) or opens a calculator app, they are offloading tasks that require mental exercise, experience and wisdom. The abacus can also output a wrong number if you mess up a step along the way, and yet people still used it extensively for centuries.

We outsource labor every day too. Not as in offshoring, but in the sense that nobody lives in a vacuum outside of society. The food we eat is grown by others, then packaged, shipped, and stocked on a shelf by a different person at each step. The device we are writing and reading Lemmygrad on was made by someone else. The LLM is not outsourcing human relationships because it’s not a human relationship; even people who use it as a stand-in for a human relationship know it’s not human. But it solves problems.

Great essay, I appreciate your reply.

I would like to see a more thorough investigation or refutation regarding AI’s potential to cause cognitive atrophy. I don’t believe we can say at all that this potential has been proven false since the advent of the internet. In fact, I would say that the internet is a great example of it.

The issue I take is not with outsourcing in general, and of course no serious person could claim that one can live in a “vacuum outside of society”. But it is clear that people are using AI or attempting to use AI as a means to outsource mental tasks, and decision making that is endemic to the human experience / cognitive growth.

Can we compare a calculator to the purported uses, or even the confirmed use cases, of AI? I don’t believe so. The calculator is but a grenade when compared to AI’s nuclear potential.

Even a surface-level investigation will reveal that regardless of whether AI is the same as a human relationship, humans will treat it that way. This sort of thing was demonstrated as early as the mid-20th century with the first chat-like computer programs, such as ELIZA in the 1960s.

Need we recirculate well-circulated articles on the subject? Need we speak to the innumerable lonely people discussing their AI friend / partner replacement on reddit forums? Need we discuss this any further? Is there even a point in fighting against something that even the left-adjacent uncritically supports?

If you are at all content with the internet, or any of our other modern facets of entertainment, I don’t believe we will ever agree.

The calculator was also a nuclear device when compared to what came before it. So was the car, and yet today nobody would tell you you can’t afford not to own a horse (except Homer Simpson maybe).

Things change, and if we want to criticize that from a Marxist perspective we have to offer something better than “I don’t like change”. It’s not all greener pastures with neural networks, but we need to be clear about what it is we criticize, and for that we need to understand things deeply.

> But it is clear that people are using AI or attempting to use AI as a means to outsource mental tasks, and decision making that is endemic to the human experience / cognitive growth.

But what is the ‘human experience’? I push for people to define their words when talking about neural networks because, more often than not, doing so shows more continuity with what already exists than a break from it. The more you use, understand and work with LLMs, the more you realize they’re really not so dissimilar from what we already know and live with daily.

You’re worried about a WarGames situation, i.e. the artificial intelligence reaching the logical conclusion that to win a nuclear standoff you should dump your warheads on the enemy first. But this has always been the plan; as soon as this technology became available, people were going to rush for exactly that. It just happened in 2022 instead of 2065 or 2093, and so we have to reckon with the reality of it now, not later. Complaining that this is now possible won’t change that it exists and that it’s being used, so instead I made the choice to find my own uses for LLMs that could be useful to communists (and incidentally I think we could probably organize for socialism much more efficiently around “the army wants to offload targets to an AI” than “AI bad destroy it all”). I’m not saying this to be dismissive, but rather that, again, we need to offer a studied, Marxist perspective on the matter.

But speaking of the human experience/cognition, there are plenty of neurodivergent people who may not fare well with typical peer-to-peer communication (spoken or written), and they appreciate having LLMs to organize and make sense of their thoughts and feelings. Disabled people have found answers from LLMs. Human cognition is not universal, and we see that LLMs already offer assistance there. When Walkmans first came out, there was a huge panic around what they actually meant for society: that the youth saw them as a form of escapism, that it was an identity thing. It went so far that novels were written about kids turning into mind-zombies after getting a Walkman, and some people even went on TV to say that using a Walkman was a gateway to committing crime. We’re talking about the iPod of its day, one that played cassette tapes.

I’m not even convinced by these studies that supposedly find all sorts of ills with usage of LLMs because I bet in just a few years plenty of errors will be found with them. They are lab studies, not real world, and I remember studies saying the same thing about search engines when they came out. I talked in another comment about how search engines are a memory bank for us; instead of remembering everything, we offload it to the search engine – I don’t necessarily remember what each property in CSS’s box-shadow does, but I know how to look that up on a search engine and find the information. Likewise we stopped remembering phone numbers the moment we got mobile phones (although we should probably remember one or two emergency numbers).

It sounds like individualism brain. People who want others to be a particular thing, live a particular way, but express no interest in helping to build a world where that is actually the case.

I couldn’t be friends with people who get annoyed at me for wanting to talk to them.

They just hate AI, for whatever varied reasons, and don’t care to talk about it. They don’t have to like it, and they don’t owe anyone an explanation, so whatareyagonnado. I wouldn’t bother engaging, and I’d steer clear of communities that especially hate it. AFAIK dbzer0 is the only instance that’s explicitly pro-AI images.