See THIS POST

Notice the 2,000 upvotes?

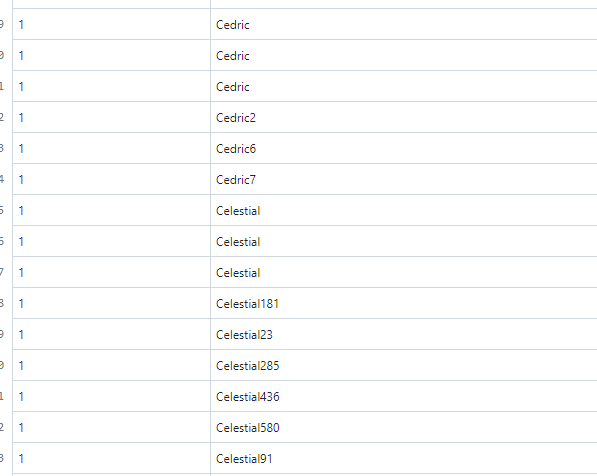

https://gist.github.com/XtremeOwnageDotCom/19422927a5225228c53517652847a76b

It’s mostly bot traffic.

Important Note

The OP of that post did admit to purposely using bots for that demonstration.

I am not making this post specifically about that post. Rather, we need to collectively organize and find a method for dealing with bot activity.

Defederation is a nuke-from-orbit approach, which WILL cause more harm than good over the long run.

Having admins proactively monitor their content and communities helps, as does enabling new user approvals, captchas, email verification, etc. But this does not solve the problem.

The REAL problem

But the real problem is this: the fediverse is so open that there is NOTHING stopping dedicated bot owners and spammers from…

- Creating new instances for hosting bots, and then federating with other servers. (Spinning up a new instance can be fully automated and takes UNDER 15 seconds)

- Hiring kids in Africa and India to create accounts for 2 cents an hour. NEWS POST 1 POST TWO

- Lemmy is EXTREMELY trusting. For example, go look at the stats for my instance online (lemmyonline.com). I can assure you, I don’t have 30k users and 1.2 million comments.

- There are no built-in “real-time” methods for admins to identify suspicious activity from their users via the UI. I am only able to fetch this data directly from the database. I don’t think it is even exposed through the REST API.

What can happen if we don’t identify a solution.

We know Meta wants to infiltrate the fediverse. We know Reddit wants the fediverse to fail.

If a single user with limited technical resources can manipulate that content, as was proven above, what is going to happen when big-corpo wants to swing their fist around?

Edits

- Removed most of the images containing instances. Some of those issues have already been taken care of, and I don’t want to distract from the ACTUAL problem.

- Cleaned up post.

This is troubling.

At least we have the data, though. Hopefully these findings are useful for updating the Fediseer/Overseer so we can more easily detect bots.

I really wish we had a good data scientist or ML person jump into this thread.

I can easily dig through data, and I can easily dig through code, but someone who could perform intelligent anomaly detection would be a godsend right now.
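To make "anomaly detection" a bit more concrete, here is a minimal sketch of one simple, non-ML starting point: flagging outliers with a modified z-score (median and median absolute deviation). The function name, the instance names, and the 3.5 threshold are assumptions for illustration, not anything taken from Lemmy itself.

```python
from statistics import median


def flag_outliers(counts, threshold=3.5):
    """Return the keys whose count is unusually HIGH compared to the rest.

    Uses the modified z-score, 0.6745 * (x - median) / MAD, which is less
    distorted by the outliers themselves than a mean/stddev z-score.
    The 3.5 cutoff is a common rule of thumb, treated here as an assumption.
    """
    values = list(counts.values())
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # More than half the values are identical, so there is no spread
        # to measure against. This sketch flags nothing in that case.
        return []
    return sorted(
        key for key, value in counts.items()
        if 0.6745 * (value - med) / mad > threshold
    )


# Hypothetical upvote counts on a single post, grouped by voter's instance.
votes_by_instance = {
    "a.example": 12,
    "b.example": 9,
    "c.example": 15,
    "d.example": 11,
    "botfarm.example": 1900,
}
print(flag_outliers(votes_by_instance))
```

Something like this only catches the crude cases (one instance dumping thousands of votes on one post). Slower, distributed manipulation would need someone who actually knows the field.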

There are data scientists around, and we are monitoring where this goes.

The biggest problem I currently see is how to share data effectively while preserving privacy. Can this be solved without sharing emails and IP addresses, or would that be necessary? Maybe securely hashing emails and IP addresses is enough, but that would hide some important data.
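As a rough sketch of what "securely hashing" could look like: a keyed hash (HMAC-SHA256) instead of a plain hash, plus a second token for the subnet or email domain so that some of the pattern information survives. The function names, the /24 and /64 prefix lengths, and the idea of a key shared among participating admins are all assumptions for illustration.

```python
import hashlib
import hmac
import ipaddress


def pseudonymize(value: str, key: bytes) -> str:
    """Keyed hash of a normalized value. Same key + same value -> same token."""
    normalized = value.strip().lower()
    return hmac.new(key, normalized.encode("utf-8"), hashlib.sha256).hexdigest()


def ip_tokens(ip: str, key: bytes) -> dict:
    """Token for the exact address plus one for its surrounding network.

    The prefix lengths (/24 for IPv4, /64 for IPv6) are assumptions.
    """
    addr = ipaddress.ip_address(ip)
    prefix = 24 if addr.version == 4 else 64
    network = ipaddress.ip_network(f"{addr}/{prefix}", strict=False)
    return {
        "ip": pseudonymize(str(addr), key),
        "subnet": pseudonymize(str(network), key),
    }


def email_tokens(email: str, key: bytes) -> dict:
    """Token for the full address plus one for the domain part."""
    _, _, domain = email.strip().lower().rpartition("@")
    return {
        "email": pseudonymize(email, key),
        "domain": pseudonymize(domain, key),
    }


# Hypothetical key; in practice it would be generated randomly and kept secret.
shared_key = b"example-key-known-only-to-trusted-admins"

a = ip_tokens("203.0.113.7", shared_key)
b = ip_tokens("203.0.113.200", shared_key)
print(a["ip"] == b["ip"], a["subnet"] == b["subnet"])
```

The key matters. There are only about 4.3 billion IPv4 addresses, so a plain unkeyed SHA-256 of an IP can be reversed by simply hashing all of them. With a keyed hash, anyone holding the key can still do that, so the key would have to stay with trusted people only.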

Should that be shared only with trusted users?

Can we create a dataset in which humans identify bots, and then share it with the larger community (like Kaggle) to help us with ideas?

There are options, and they will be built; it just cannot happen in a few days. People are working non-stop to fix (currently) more important issues.

Be patient, collect the data, and let’s work on a solution.

And let’s be nice to each other; we all have similar goals here.

So, email addresses and instances are actually only known by the instance hosting the user. That data is not even included in the persons table. It’s stored in the local_user table, away from the data in question. As such, it wouldn’t be needed or included in the dataset.

Regarding privacy, that actually isn’t a problem. On Lemmy, EVERYTHING is shared with all federated instances: votes, comments, posts, etc. As such, there isn’t anything I can share from my data that isn’t already known by many other individuals.

Absolutely. We can even completely automate the process of aggregating and displaying this data.

db0 also posted an idea in this thread and is working on a project to help humans vet instances. I think that might be a start too.

That sounds great, and at least we can try something and learn what can or cannot be done. I am totally interested in working on bot detection.

I know that emails remain local. They could be an important part of pattern detection, but it has to be done without them.

Fediseer sounds great, at least for building some trust in instances.

I am thinking more about detection based on votes, comments and posts from individual accounts, in which Fediseer would be quite an important weight.
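A very rough sketch of what I mean by using instance trust as a weight. Everything here is hypothetical: the `AccountActivity` fields, the "accounts that only vote look suspicious" heuristic, and the trust values in the 0 to 1 range (however they end up being obtained, from Fediseer or elsewhere).

```python
from dataclasses import dataclass


@dataclass
class AccountActivity:
    name: str
    instance: str
    votes: int
    comments: int
    posts: int


def suspicion(account, instance_trust, default_trust=0.5):
    """Score in [0, 1]; higher means more suspicious.

    Account-level signal (an assumption): share of activity that is votes only.
    Instance-level weight: 1 - trust, so a fully trusted instance gives 0.
    Instances missing from the trust map get default_trust.
    """
    total = account.votes + account.comments + account.posts
    if total == 0:
        return 0.0
    vote_share = account.votes / total
    trust = instance_trust.get(account.instance, default_trust)
    return vote_share * (1.0 - trust)


# Hypothetical trust values and accounts.
trust = {"a.example": 1.0, "botfarm.example": 0.0}
accounts = [
    AccountActivity("regular", "a.example", votes=50, comments=30, posts=20),
    AccountActivity("voter123", "botfarm.example", votes=500, comments=0, posts=0),
    AccountActivity("newcomer", "unknown.example", votes=10, comments=0, posts=0),
]
for acc in accounts:
    print(acc.name, suspicion(acc, trust))
```

Multiplying the two signals is just the simplest way to combine them. It also means a trusted instance can never have a suspicious account, which is probably too generous, so a real version would need a better formula.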

The best approach might be to work on a service intended to run locally alongside Lemmy.

That way, data privacy isn’t a huge concern, since the data never leaves the local server/network.