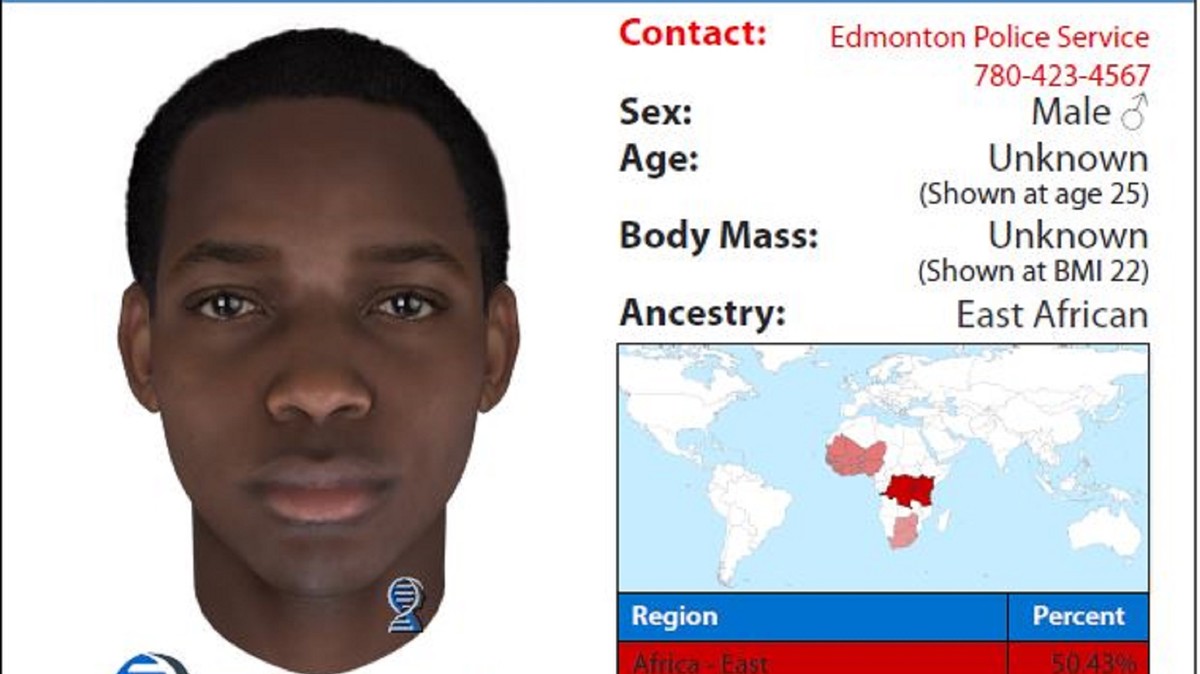

And of course they show the image of a black guy as a demonstration…

It’s coming right for us!

“Can AI be racist?”

Sure it can! If the people programming them are racist!

@AgreeableLandscape @OptimusPrime AI can’t be racist by design; it’s a machine.

AI trained on racist data will mirror the racism of its input dataset.

Imagine that you train an AI on a dataset of videos to determine whether someone is lying. If that dataset is human-curated and labeled with racist tendencies (for example, people who look a certain way are labeled as lying more often even when they aren’t), then the AI will learn that.

But even a perfectly true dataset can train a racist AI. Imagine that the dataset only has lying examples for people who look a certain way (or the vast majority of their examples are lying), whereas another group of people is lying only 10% of the time. The AI will probably extrapolate that everyone in the first group is lying, because it has seen few or no counterexamples.
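To make that second point concrete, here’s a toy sketch. It’s not a real lie detector: the groups "A" and "B", the data, and the `train_majority_by_group` function are all made up for illustration. The "model" just learns the most common label per group, but it shows how a sample where every record is true, yet skewed, still produces a rule like "everyone in group A is lying".

```python
from collections import Counter, defaultdict


def train_majority_by_group(examples):
    """Hypothetical, deliberately naive classifier.

    `examples` is a list of (group, is_lying) pairs. For each group it
    remembers the most common label seen in training, so the only thing
    it can "learn" is the link between group and label.
    """
    counts = defaultdict(Counter)
    for group, is_lying in examples:
        counts[group][is_lying] += 1
    # most_common(1) returns [(label, count)]; keep only the label
    return {group: c.most_common(1)[0][0] for group, c in counts.items()}


# Made-up training data: every record is accurate, but the sample is skewed.
# Group "A" was mostly recorded while lying; group "B" lies 10% of the time.
training = (
    [("A", True)] * 9 + [("A", False)] * 1
    + [("B", True)] * 1 + [("B", False)] * 9
)

model = train_majority_by_group(training)
print(model)  # {'A': True, 'B': False}

# New people: two honest members of group A and one lying member of group B.
new_people = [("A", False), ("A", False), ("B", True)]
predictions = [model[group] for group, _truth in new_people]
print(predictions)  # [True, True, False]
```

Both honest people from group A get flagged and the liar from group B gets a pass. A real model uses many more features, but if appearance correlates with the label in the training sample, it can pick up the same shortcut.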

You did not get the question, my guy

The dogs at Nazi death camps were trained to attack the prisoners. Did the dogs have some innate hatred for Jews or Slavs? No. But their owners did, and they transferred that to the dogs. In the end, though, it doesn’t matter whether the dogs personally hated them or not; they still mauled them.

It’s the same with AI. It doesn’t know what a black person is, because it’s a piece of software without human consciousness. But if black people are disproportionately being falsely indicted at the AI’s suggestion because it was programmed with a bias, it doesn’t matter what the AI knows or doesn’t know; the effect is the same either way. And yes, the people who made it were racist, and so are the people who keep heeding it after learning about the bias.