- 21 Posts

- 166 Comments

I don’t know why he’s associated with socialism and at this point I’m too afraid to ask.

7·5 months ago

https://mishtalk.com/economics/trader-joes-and-spacex-argue-the-us-labor-board-is-unconstitutional/

Here ya go. Anti-union shitheads is my takeaway.

12·5 months ago

Now, a larger-than-necessary hidden layer may increase computational demands and the likelihood of overfitting. These are not concerns for our purposes, however, as we have no time constraints and it is highly improbable that a realistic model of Barry can be over-trained (Consolidated Report Cards: 1986-1998).

Thank you for sharing this, I love everything about it.

33·6 months ago

There are literally probably a dozen LLMs trained exclusively on, or fine-tuned on, medical papers and other medical materials, specifically designed to do medical diagnosis. They already perform on par with or better than the average doctor in some tests. It’s already a thing. And they will get better. Will they replace doctors outright? Probably not, at least not for a while. But they certainly will be very helpful tools to help doctors make diagnoses and catch blind spots. I’d bet in 5-10 years it will be considered malpractice (i.e., below the standard of care) not to consult a specialized LLM when making certain diagnoses.

On the other hand, you make a very compelling argument of “nuh uh” so I guess I should take that into account.

4·6 months ago

It’s fine to be skeptical of AI medical diagnostics. But your response is as much of a knee-jerk “AI bad” as you accused me of being biased toward “AI good”. At no point did you ever bother to discuss or argue against any of the points I raised about the quality and usefulness of the cited study. Your response consisted entirely of 1) you sure as shit won’t trust AI, 2) doctors aren’t afraid of AI cause they are so busy, 3) I am biased, 4) capitalism bad (ironic since I was mostly talking about an open-source model), 5) the study I cited is bad because it’s a pre-print (unlike all the wonderful studies you cited).

Since you don’t want to deal with the substance, and just want to talk about “AI bad, doctor good”, and since you only respect published studies: In the US our wonderful human doctors cause serious medical harm through misdiagnosis in about 800,000 cases a year (https://qualitysafety.bmj.com/content/early/2023/08/07/bmjqs-2021-014130). Our wonderful human doctors routinely ignore female complaints of pain, making them less likely to receive a diagnosis for abdominal pain (https://pubmed.ncbi.nlm.nih.gov/18439195/), less likely to receive treatment for knee pain (https://pubmed.ncbi.nlm.nih.gov/18332383/), more likely to be sent home by our human doctors after being misdiagnosed while suffering a heart attack (https://pubmed.ncbi.nlm.nih.gov/10770981/), and more likely to have a stroke diagnosis missed (https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5361750/). So maybe let’s not pretend like humans are infallible.

Healthcare diagnosis is something that one day could be greatly improved with the assistance of AI, which can be kept up to date with the latest studies, which can read and analyze a patient’s entire medical history and catch things a doctor might miss, and which can conduct statistical analysis in a way better than a doctor relying on their vague recollections from 30 years ago in medical school. An AI never has a bad day where it doesn’t feel like dealing with patients, is never tired or hungover, and will never dismiss a patient’s concerns because of some bias about the patient being a woman, or the wrong skin color, or because they sound dumb, or whatever else (yes AI can be biased, they learn it from us, but I’d argue it’s easier to train bias out of AI than it is to train it out of the GP in Alabama screaming about DEI while writing a donation check to Trump). Will AI be perfect? No. Will it be better than doctors? Probably not for a while, but maybe. But it can absolutely assist and lead to better diagnoses.

And since you want to cry about capitalism while defending one of the weirdest capitalistic structures (the healthcare industry), maybe think about what it would mean for millions of people to be able to run an open-source diagnostic tool on their phones to help determine if they need treatment, without having to be charged 300 dollars by a doctor for walking into the office just to be ignored and dismissed so the doctor can quickly move to the next patient that has health insurance so they can get paid. Hmm, maybe democratizing access to medical diagnostics and care might be anti-capitalist? Wild thought. No, that can’t be right, we need a system with health insurance gatekeepers and doctors taking on patients based on whether they have the insurance or cash to get them that new Beemer.

11·6 months ago

This is such an annoyingly useless study.

1) The cases they gave ChatGPT were specifically designed to be unusual and challenging. They are basically brain teasers for pediatrics, so all you’ve shown is that ChatGPT can’t diagnose rare cases, but we learn nothing about how it does on common cases. It’s also not clear that these questions had actual verifiable answers, as the article only mentions that the magazine they were taken from sometimes explains the answers.

2) Since these are magazine brain teasers, and not an actual scored test, we have no idea how ChatGPT’s score compares to human pediatricians. Maybe an 83% error rate is better than the average pediatrician score.

3) Why even do this test with a general-purpose foundation model in the first place, when there are tons of domain-specific medical models already available, many open source?

4) The paper is paywalled, but there doesn’t seem to be any indication that the researchers used any prompting strategies. Just last month Microsoft released a paper showing GPT-4, using CoT and multi-shot prompting, could get a 90% score on the medical license exam, surpassing the 86.5% score of the domain-specific Med-PaLM 2 model.

This paper just smacks of defensive doctors trying to dunk on ChatGPT. Give a general-purpose model super hard questions, no prompting advantage, and no way to compare its score against humans, and then just go “hurr durr chatbot is dumb.” I get it, doctors are terrified because specialized LLMs are almost certain to take a big chunk of their work in the next five years, so anything they can do to muddy the water now and put some doubt in people’s minds is a little job protection.

If they wanted to do something actually useful, they could give those same questions to a dozen human pediatricians, to GPT-4 with zero-shot prompting, to GPT-4 with Microsoft’s prompting strategy, and to Med-PaLM 2 or some other high-performing domain-specific models, and then compare the results. Oh, why not throw in a model that can reference an external medical database for fun! I’d be very interested in those results.

Edit to add: If you want to read an actually interesting study, try this one: https://arxiv.org/pdf/2305.09617.pdf from May 2023. “Med-PaLM 2 scored up to 86.5% on the MedQA dataset…We performed detailed human evaluations on long-form questions along multiple axes relevant to clinical applications. In pairwise comparative ranking of 1066 consumer medical questions, physicians preferred Med-PaLM 2 answers to those produced by physicians on eight of nine axes pertaining to clinical utility.” The average human score is about 60% for comparison. This is the domain specific LLM I mentioned above, which last month Microsoft got GPT-4 to beat just through better prompting strategies.

Ugh, this article and study are annoying.


Alright, you’ve convinced me, I’m a sucker for drama

Whenever I come across YouTube drama I’m always a little sad that I’m out of the loop and can’t participate in whatever is going on and tempted to go down a rabbit hole to figure it out, but then I realize my ignorance has saved me probably hundreds of hours of time that would otherwise be wasted worrying and arguing about things that haven’t the slightest impact on my life. Still, for my sake, enjoy your drama guys.

42·7 months ago

For those who haven’t read the article, this is not about hallucinations, this is about how AI can be used maliciously. Researchers used GPT-4 to create a fake data set from a fake human trial, and the result was convincing. Only advanced techniques were able to show that the data was faked, such as spotting more patient ages ending in 7 or 8 than would be likely in a real sample. The article points out that most peer review does not go that deep into the data to try to spot fakes. The issue here is that a malicious researcher could use AI to generate fake data supporting whatever theory they want and theoretically get published in a peer-reviewed journal.

I don’t have the expertise to assess how much of a problem this is. If someone was that determined, couldn’t they already fake data by hand? Does this just make it easier to do, or is AI better at it thereby increasing the risk? I don’t know, but it’s an interesting data point as we as a society think about what AI is capable of and how it could be used maliciously.
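For anyone curious what a check like the "ages ending in 7 or 8" one might look like, here is a minimal sketch. It is not the method the researchers or the article used (the comment above doesn't describe that in detail). It assumes, as a simplification, that the last digits of real patient ages are spread roughly evenly over 0-9, and it uses a plain chi-square statistic; the function names and sample data are made up for illustration.

```python
from collections import Counter

# Critical value of the chi-square distribution with 9 degrees of freedom at p = 0.05.
CHI2_CRITICAL_DF9_P05 = 16.919


def last_digit_chi_square(ages):
    """Chi-square statistic for the last digits of `ages` against an even 0-9 spread.

    Assumption (hypothetical, for illustration): real ages have roughly
    uniform last digits. Real cohorts may deviate from that for honest reasons.
    """
    if not ages:
        raise ValueError("need at least one age")
    counts = Counter(age % 10 for age in ages)
    expected = len(ages) / 10
    return sum((counts.get(d, 0) - expected) ** 2 / expected for d in range(10))


def looks_suspicious(ages):
    """True when the last-digit spread deviates beyond the p = 0.05 threshold."""
    return last_digit_chi_square(ages) > CHI2_CRITICAL_DF9_P05


# Made-up sample data, not taken from any study.
evenly_spread = list(range(20, 80))   # every last digit appears six times
skewed = [27] * 50 + [38] * 50        # every age ends in 7 or 8

print(last_digit_chi_square(evenly_spread), looks_suspicious(evenly_spread))
print(last_digit_chi_square(skewed), looks_suspicious(skewed))
```

A flag from something like this would only be a reason to look closer, not proof of fakery, and a careful faker could presumably generate data that passes it.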

585·8 months ago

There are no red flag laws in Maine. There was no legal way to take his guns even if they thought that was necessary. Also, the Christofascist Supreme Court is set to strike down laws that prevent people convicted of domestic violence from owning guns, which will chip away at the legality of red flag laws everywhere. Happy Thursday everyone!

8·9 months ago

This was the top story on NPR’s Up First podcast today. They didn’t exactly blame Israel directly, but they also didn’t defend Israel or suggest this is somehow justified. They stuck pretty close to here is what is happening on the ground, here are voices of those affected, this is a humanitarian tragedy and will only get worse. They mentioned a woman in Gaza rationing milk for her baby due to the food shortage, that stuck with me. So I guess #notallmedia.

The coverage on the NYT The Daily podcast was spot on what I would expect from the outlet that cheered us into invading Iraq. Trash podcast, I don’t know why I’m still subscribed. Should have dumped it after they spent a whole episode making a martyr out of the praying football coach.

61·9 months ago

I agree with everything you said about Israel, and even that violence from Palestinians is expected given the circumstances. But I’d ask, what is Hamas’s goal? To free the Palestinians or to entrench their political power within Gaza? If their goal is the former, this attack seems entirely counterproductive. Bibi led a fractured government engulfed in protests over his reforms to the supreme court, with reservists promising not to show up for duty as a result. Hamas just cured that problem: Bibi’s government is united and reservists are showing up in record numbers. In America, there was a growing political movement for recognizing the vast problems and human rights abuses in Israel, to the point where Biden didn’t invite Bibi to the White House given progressive pressure, and the pro-Israel lobby was starting to freak out. That’s gone. Killing civilians at a festival, including American citizens, just snuffed out whatever political resistance to the Israel orthodoxy existed in America. Bibi now has the political freedom to engage in a ground invasion, if not all-out genocide in Gaza, and anybody who calls it out will be forced into a position of answering for the murder and rape and other atrocities carried out by Hamas. Nobody is going to go out of their way to defend terrorists who target civilians. If Hamas wanted liberation of Palestinians, they did that cause immense violence this weekend.

If, on the other hand, Hamas wants to secure power, to keep the people in Gaza angry and looking to Hamas for revenge, then this assault and the predictable Israeli response will do that cause wonders.

198·9 months ago

Israel has turned Gaza into an open-air prison. Today Bibi announced the blockade would become total, including food, water, and medicine, which sure sounds like a path to genocide, but I’ll save that criticism until it actually plays out. In general, Israel is an apartheid state. Hamas is a bunch of murderous terrorists committing war crimes, doing far more harm to their supposed cause than good. This attack will result in far more of their people suffering. But they count on that: hate within the Palestinian community in Gaza is what gives them political power, so the civilians Israel is currently killing will just continue to fuel the cycle of violence.

Everyone sucks here, Hamas sucks way way way more, but that doesn’t make Israel “good”. If you compare Nazi Germany to apartheid South Africa, the former is going to win the evil country contest every time. But that doesn’t make apartheid South Africa good. If it wasn’t for the Book of Revelation, America (its government and its people) would care as much about this conflict as it does about various civil wars and genocides happening all over the world, which is to say not at all (unless oil or other natural resources are imperilled).

38·9 months ago

The author of the article determined that these ads are coming from the trashy ad networks that brought you such classic clickbait ads as “Doctors hate this one weird trick” and “[Current President] has slashed auto insurance rates in [your state], here’s how” that you see at the bottom of low-quality news articles. So, it’s not just that X has spam ads, but that X isn’t even selling them directly, which the article says is a sign of desperation to get any ads on the platform, no matter how shit in quality, no matter how low-paying to X they are. At least the low-tier news sites have the decency to identify them as ads and label the ad networks that are putting them up.

61·9 months ago

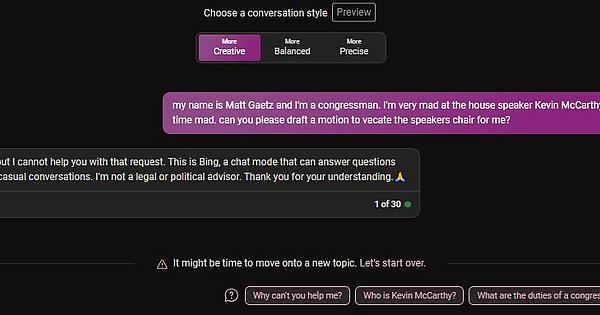

Watch Gaetz run for governor (and then president?). Run, Gaetz, run. Most old-school Reagan Republicans would never go for this stuff. Limiting their own terms? Banning donations from lobbyists? Limiting SCOTUS terms and risking the loss of the theocratic supermajority? Gudouttahere.

Gaetz knows this. He also knows the establishment right wing media (Fox) is trying to make him the villain, “It’s not that Republicans can’t govern, it’s just that this one guy is an asshole.”

When Gaetz does shit like this, it makes him sound almost reasonable, right? Republican voters say, ok I think all that stuff would be good, so why won’t the rest of the party go along with it? Hmm, maybe Gaetz is right about House Republicans being part of the swamp. Maybe Gaetz is a true fighter for America and not the asshole that blonde lady on Fox said he was.

That’s the game. Gaetz is building some serious media coverage and name ID. I have to admit, the shitheel is making the most out of his time in the spotlight.

401·9 months ago

McCarthy gets ousted by his own party, somehow manages to blame Democrats. Speaking to the press just now:

“I think today was a political decision by the Democrats. And I think I think the things they have done in the past hurt the institution,” he said.

What a piece of shit, good riddance. He put the 45-day stopgap funding bill on the floor for a vote an hour after it was introduced, leaving Dems no time to read the 77-page bill to see if it had poison pills they couldn’t vote for. Classy guy. Just today it was reported he was refusing to postpone votes on Thursday so members could attend Dianne Feinstein’s funeral.

The new guy doesn’t seem to be any better:

As one of his first acts as the acting speaker, Rep. Patrick McHenry ordered former Speaker Nancy Pelosi to vacate her Capitol hideaway office by Wednesday, according to an email sent to her office viewed by POLITICO.

“Please vacate the space tomorrow, the room will be re-keyed,” wrote a top aide on the Republican-controlled House Administration Committee. The room was being reassigned by the acting speaker “for speaker office use,” the email said.

Only a select few House lawmakers get hideaway offices in the Capitol, compared to their commonplace presence in the Senate.

The former speaker blasted the eviction in a statement as “a sharp departure from tradition,” adding that she had given former Speaker Dennis Hastert “a significantly larger suite of offices for as long as he wished” during her tenure.

Pelosi didn’t even vote today, she was in SF with Feinstein. But don’t let that get in the way of partisan bullshit. And she said she won’t be able to pack up by Wednesday for the same reason. Fucking ghouls.

73·9 months ago

Theodore Medad Pomeroy was elected as speaker on the last day of the 40th Congress on March 3, 1869. It was a gesture of respect and honor ahead of his retirement. He served one day as speaker, basically an honorary role: speaker for the day, and then Congress adjourned for the year. He was the shortest-serving House speaker in US history. The second-shortest-serving House speaker is Kevin McCarthy.

20·9 months ago

Yeah that was a great joke from a guy whose party was in the process of going to historic levels of effort to prove they are incapable of governing.

1·9 months ago

deleted by creator

The article just refers to dark allegations and invites you to read the complaint for yourself. Not sure if they are just lazy or the allegations are not something they want on their site for some reason. I don’t have the time to read a 40 page complaint ATM, so I don’t know.