I asked GPT-4 to refactor a simple, working Python script for my smart lights… and it completely butchered the code and apologized mid-generation.

No amount of pleading or correction would get it to function as it did just a week or two ago.

It is so over.

GPT-4 is not good at writing code. I think it’s because it has a lower token limit. Ask GPT-4 to write out detailed specs for the code you want, then copy and paste that into a GPT-3.5 session and ask it to write the code.

And if it gets cut off, paste in the last line it output successfully and ask it to continue with the line following that one. Then just copy and paste the blocks together.
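For anyone who would rather not stitch the blocks by hand, here is a minimal sketch of that step in Python. The function name `stitch_chunks` is made up for illustration, and it assumes each continuation either picks up on the next line or starts by repeating the last line you pasted in; if the model restarts from somewhere else, you still have to fix it up manually.

```python
def stitch_chunks(chunks):
    """Join code chunks from successive replies into one block.

    Hypothetical helper: if a continuation starts by repeating the last
    line we already have (the "anchor" line that was pasted back in),
    that duplicate line is dropped before joining.
    """
    merged = []
    for chunk in chunks:
        lines = chunk.splitlines()
        # Compare ignoring surrounding whitespace, since replies often
        # re-indent the repeated line slightly differently.
        if merged and lines and lines[0].strip() == merged[-1].strip():
            lines = lines[1:]
        merged.extend(lines)
    return "\n".join(merged)


first_reply = "def f():\n    x = 1"
second_reply = "    x = 1\n    return x"
stitched = stitch_chunks([first_reply, second_reply])
print(stitched)
```

It only checks the single anchor line, so a longer overlap between replies will still come through duplicated.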

I noticed this today working on some bash scripts. Compared to a few weeks ago it’s become noticeably dumber, but also faster.

They must be understandably desperate to burn less cash with each API call.

Use the playground.

Playground?

https://platform.openai.com/playground

You can adjust the settings.

Oh great, so now I have to pay on top of my Plus membership?

A couple of prompts already cost me $0.03. I could easily run up hundreds of dollars if I used it as much as I have in the past.

Do you have any tips or preferred settings when using the Playground to code?

It actually works out cheaper in most cases to use the Playground than to pay for ChatGPT Plus.

It does not add up that much unless you’re tokenizing a metric ton of content. It’s a few extra bucks and you can set a spending limit. I have rarely hit the 5 dollar mark with consistent writing. Limit it to a fiver and see how it goes. As for the settings, it is really model specific. There are a ton of guides out there, though, for effective instructing. I believe Wolfram Alpha has a few. Their formatting is nice: https://medium.com/machine-minds/chatgpt-python-machine-learning-prompts-abefc544412c Playground pricing is what they use for commercial API use. It has to be affordable.
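If you want to sanity-check that before setting a limit, the arithmetic is just tokens times price. A rough sketch, where the function name and every number in the example call are placeholders I made up, not actual OpenAI pricing; plug in the current per-1K-token rate for your model from the pricing page.

```python
def estimate_monthly_cost(prompts_per_day, tokens_per_prompt, usd_per_1k_tokens, days=30):
    """Rough monthly spend for pay-per-token usage.

    All inputs are your own estimates. tokens_per_prompt should cover
    both what you send and what comes back.
    """
    total_tokens = prompts_per_day * tokens_per_prompt * days
    return total_tokens / 1000 * usd_per_1k_tokens


# Placeholder values for illustration only (not real prices or usage data).
cost = estimate_monthly_cost(prompts_per_day=20, tokens_per_prompt=500, usd_per_1k_tokens=0.03)
print(f"Estimated monthly cost: ${cost:.2f}")
```

Compare the result with what you pay for a flat subscription to see which side of the line your usage falls on.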

I noticed this today, too. Yesterday I was able to feed it Pastebin and GitHub links; today I had to copy/paste everything. Code output was broken. Still works for comments and unit tests.

It’s moderately good at in-line commenting functions and creating full function doc comments for the specific language / documentation format you need, but its code generation abilities are still not game-changing. Getting it to generate anything longer than a few helper functions is a test of patience.

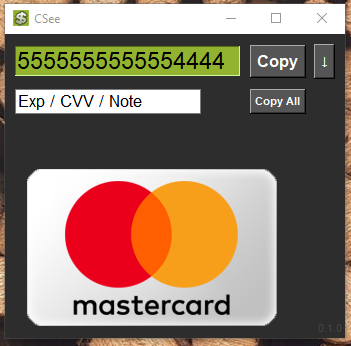

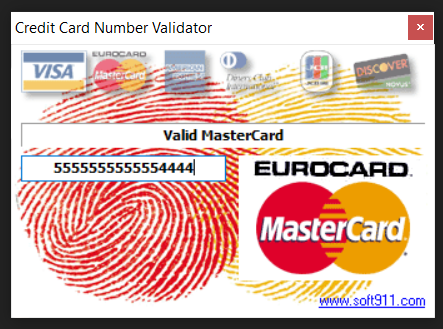

This wasn’t always the case. I had zero Python experience a month ago, and managed to make a 300-line Python script that validates credit card numbers and has a beautiful UI. This would be impossible today.

You said “beautiful” but I’m not sure you are using that word right.

Considering how long I’ve been using Python, and how it looked when I started, it is to me. And here is the ancient one I was previously using:

They had to make it too dumb to draw Disney characters… you think I’m joking, try getting it to render a Disney character in SVG or JavaScript…

Microsoft bought it. They’re not going to let their paying userbase of millions of coders evaporate…

Microsoft wants to own tools crucial to the mainstream of software development. They also want to own the cloud infrastructure on which those tools depend. Today, they might lose dimes on every LLM call. In five years, they’ll make a penny on orders of magnitude more calls. Microsoft has many flaws, including cloud capacity, but they aren’t short-sighted about investment. (I used to work in DevDiv and Azure Machine Learning.)

It’s Microsoft. Expecting them to make good and logical decisions is completely delusional.

Good and logical decisions are plausible. However, expecting Microsoft to make consistent decisions and be able to work as a single cohesive team, now that’s delusional.

People were saying the exact same thing a few weeks ago, and have been ever since it came out basically.

You having issues with one prompt or one conversation doesn’t mean it’s dumb now.

Still working fine for me.

Blowing up on reddit as well and tons of people agree: https://www.reddit.com/r/ChatGPT/comments/14ruui2/i_use_chatgpt_for_hours_everyday_and_can_say_100/?sort=new

Again, people were saying the exact same things over a month ago about how it had changed since a month ago at that point.

I’ve never had this issue until the last couple days.

Which just kinda proves my point that it probably didn’t change that much, because people were saying what you are saying months ago.