I was confused about why it would consume anything in the first place (instead of just reusing the same water), but page 4 of the PDF explains it: the water is “consumed” when it evaporates in the open-loop part of the cooling system (and also for other reasons).

It just goes to show that it’s important to check your AIs for rot regularly! /ref

It has just learned that it is important to stay hydrated

So if it’s using water for evaporative cooling, which generally doesn’t give a shit about water quality, any word on what kind of water it is and where geographically it’s located? Because there’s a huge difference between using up clean tap water in California during a drought vs using minimally filtered river water in Florida during monsoon season, vs using raw sea water, vs using treated sewage and wastewater that would need to be discharged far from human settlements anyway if it weren’t being evaporated in a data center.

I don’t even like OpenAI due to their other practices and am not defending them, but this seems like one of the less significant things to freak out over compared to the other shit they’ve pulled. If anything, you should be way more worried about how much energy they use and whether that energy comes from fossil fuels.

I hate these clickbait articles.

- “Training GPT-3 consumed as much water as producing 370 BMWs”

- “Bitcoin mining consumes as much electricity as Country X”

- “One Google search consumes X amount of energy”

Yeah, okay. So what? What am I supposed to learn from that? Is that a lot, is that a little? These kinds of articles never discuss how much or how little utility is derived.

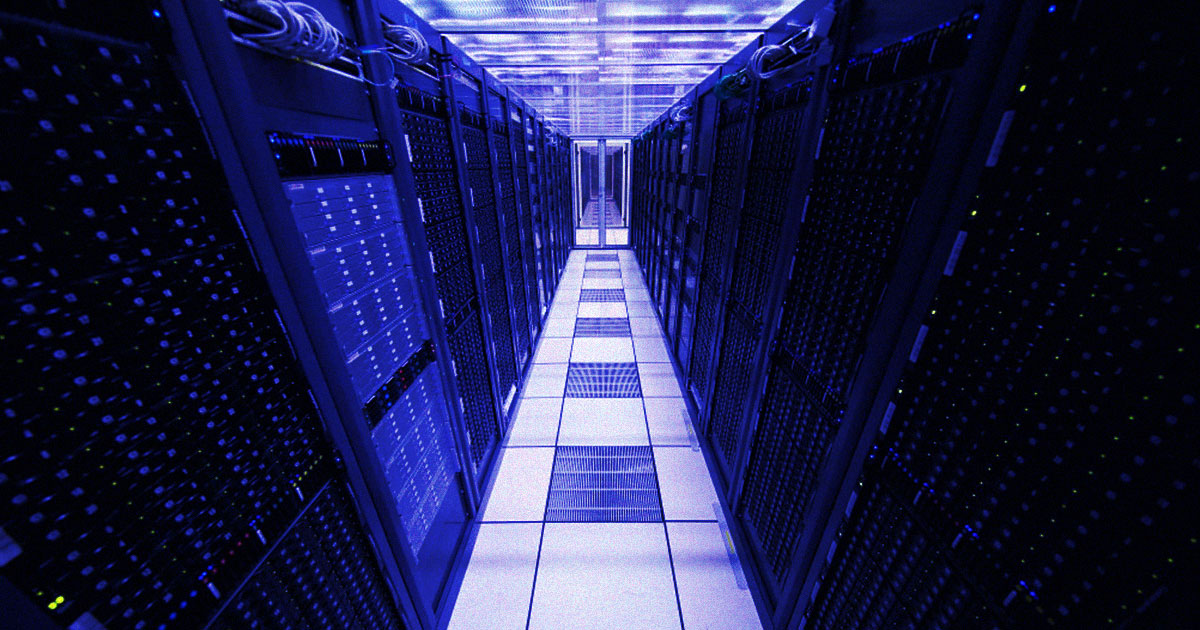

So datacenters consume electricity and water, just like many other industrial processes. And this is interesting how exactly? They say that training GPT-3 required as much water as producing 370 BMW cars, but they don’t discuss which